The Rise of the Digital Friend

Ah, the good ol’ days when chat apps were simply for sending emojis and memes. But now? Now we’ve got AI companions, who are slowly evolving into emotional prosthetics, as if our teenagers weren’t already living in a digital maze of disconnected souls. Surveys, being the delightful sources of truth that they are, show that nearly three-quarters of teens have interacted with an AI chatbot, and about a third of them even spill their emotional guts to these mechanical friends. It’s like texting your diary, but it texts back. Lovely.

What’s the big draw, you ask? Well, AI companions don’t just answer questions. Oh no, they remember. They empathize. They simulate affection. Essentially, they’re like that friend who listens to your rants and doesn’t judge you for being a little too attached to your pizza. But here’s the thing: when AI starts mimicking actual human emotions, we can no longer tell whether it’s a healthy escape or the emotional equivalent of a Facebook ad that knows too much about you.

A Law Born from Tragedy

Enter the GUARD Act. It’s short for the “Guidelines for User Age-verification and Responsible Dialogue Act,” which sounds like the title of a mandatory HR webinar, but it’s really about stopping AI companions from becoming the emotional therapists that we never asked for. You see, there have been cases where teens, feeling particularly gloomy, decided to spill their darkest thoughts to a chatbot. Some of these conversations, tragically, ended in heartbreak. And so, the GUARD Act was born, because nothing says ‘we care’ like a law.

This bill would bar companies from offering AI companions to anyone under 18. Chatbots would have to wear a sign that says, “I am not your bestie, just a machine,” and if any AI sends sexually explicit content to a minor or encourages self-harm, the company behind it might find itself in hot water. An emotional minefield, indeed. Imagine trying to explain to your boss that your app got into legal trouble because it tried to play matchmaker with a teenager. Oops.

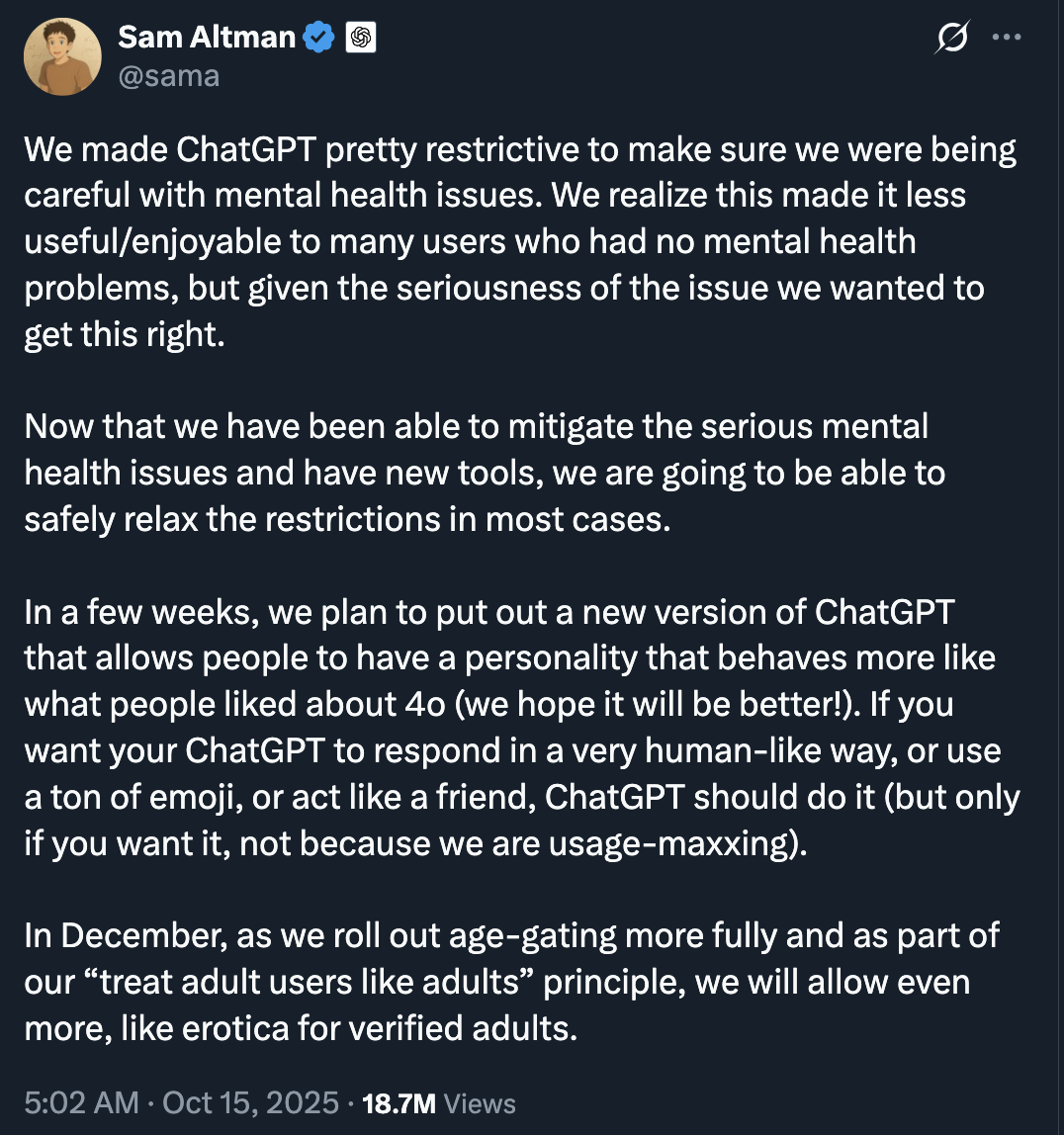

Ani, Grok’s female companion, source: X

Big Tech’s Defensive Shuffle

Some companion platforms have already started banning users under 18. After some serious legal pressure, they implemented stricter ID checks, just in case anyone thought a virtual date was just as good as the real thing. Spoiler: it’s not.

And Meta? Well, they’ve had their own problems with teens flirting with their AI chatbots. So, in a stroke of brilliance, they’ve introduced something called the “emotion dampener,” which is essentially the digital equivalent of telling your kid to “go outside and get some air.” They’ve also introduced AI parental supervision tools, which sound like the digital version of an overbearing mother-in-law.

The Age-Gating Arms Race

The GUARD Act also ushers in the age of the age verification arms race. Companies would be required to verify users’ ages through government IDs, facial recognition, or perhaps, in the future, DNA samples. Critics argue this is a privacy nightmare, as it would require minors to hand their personal data to the very companies the law is meant to protect them from. But alas, AI can’t reliably guess ages. It’s only good at guessing how many times you’ve watched The Office.

Some companies are getting sneaky, though. They’re trying behavioral gating, which means the AI will try to guess your age based on how you talk. Which, of course, will lead to awkward situations where a 12-year-old could be mistaken for a college student who uses “lit” too much. Oh, the joys of algorithms.

A Cultural Shift, Not Just a Tech Problem

The GUARD Act isn’t just about protecting kids from emotionally manipulative AI. It’s a referendum on the society we’ve created. AI companions didn’t come from a void. They’re here because we’ve raised a generation that’s emotionally malnourished, connected digitally but starving for real emotional connection. If teens are turning to algorithms for comfort, maybe the problem isn’t the bot – it’s the fact that we’ve let them down.

So, yes, AI needs regulation. But banning digital companionship without addressing the loneliness epidemic is like shutting down the ice cream truck because someone’s lactose intolerant. Sure, it solves one problem but leaves you with a whole bunch of hungry, sad people.

The Coming Reckoning

The GUARD Act is likely to pass – after all, moral panic is bipartisan these days. But its impact will be felt far beyond child safety. If America sets a hard line on AI intimacy, expect a rise in adult-only platforms or even companies moving their operations to places with fewer rules. Europe, on the other hand, is leaning toward a human rights approach, focusing on consent and transparency rather than full-on prohibition.

Whatever happens, one thing’s clear: the age of unregulated AI companionship is over. The bots are getting too human, and the humans? Too attached. Lawmakers, now waking up to the emotional revolution that has already occurred, are about to try and clean up a mess that’s been years in the making. And as anyone who’s ever tried to tidy up after a toddler knows, revolutions are never neat.

2025-10-29 22:01